How machine learning is embedded to support clinician decision making

Centre for Health Informatics

Research stream

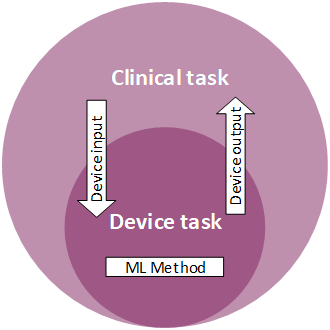

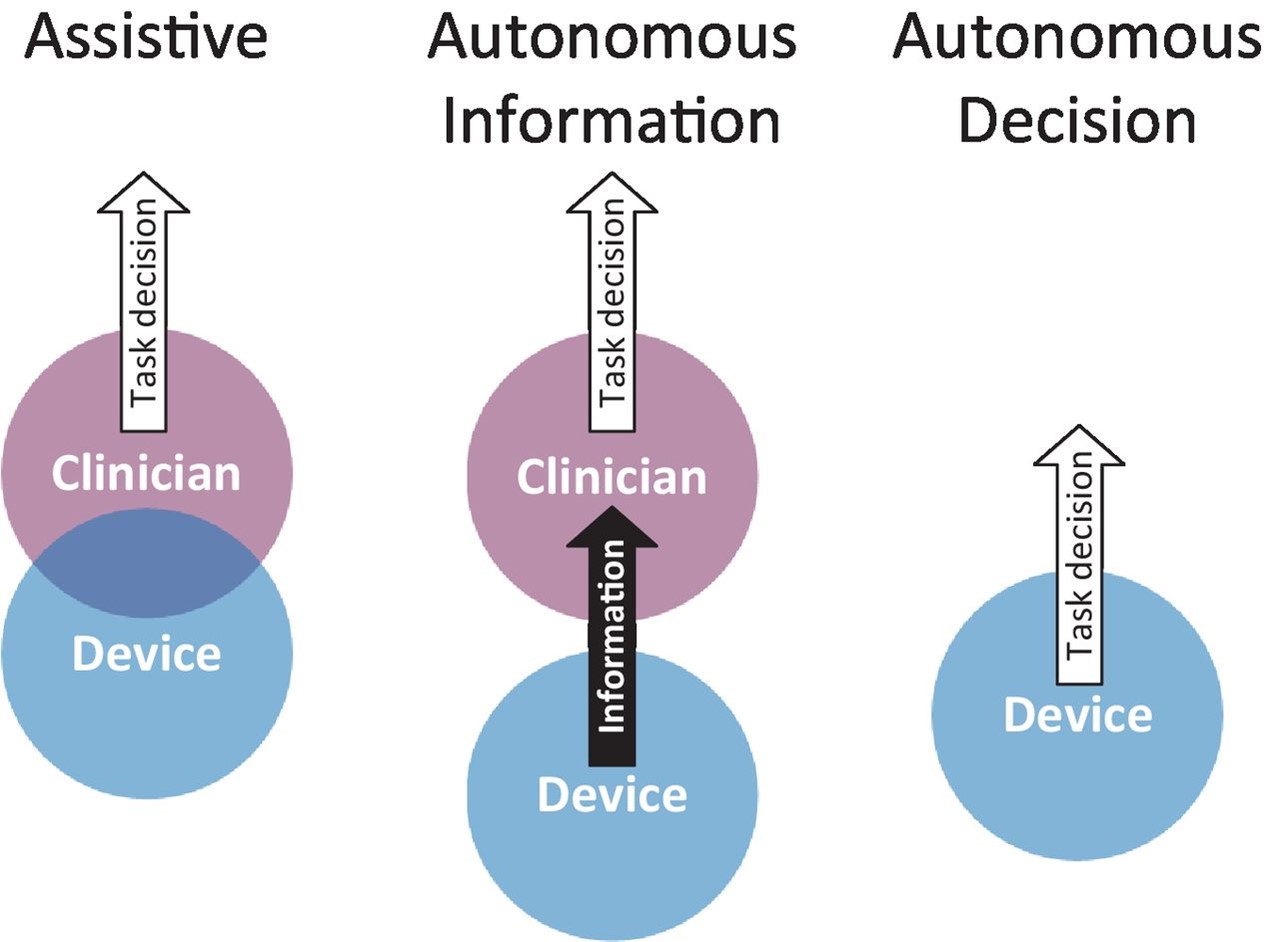

Level of autonomy showing the relationship between clinician and device.

Project members

Dr David Lyell

Dr Ying Wang

Professor Farah Magrabi

Professor Enrico Coiera

Project contact

Dr David Lyell

E: david.lyell@mq.edu.au

Project main description

While artificial intelligence (AI) has the potential to support clinicians in their decision making, translating that potential into real-world benefit requires particular attention to how clinicians in Australia and internationally will work with and use AI to improve the safety and effectiveness of the care patients receive. Central to the clinician-AI relationship are three questions: how AI fits into the clinical tasks clinicians perform, such as diagnosing disease; what AI contributes to those tasks; and how AI changes the way clinicians work.

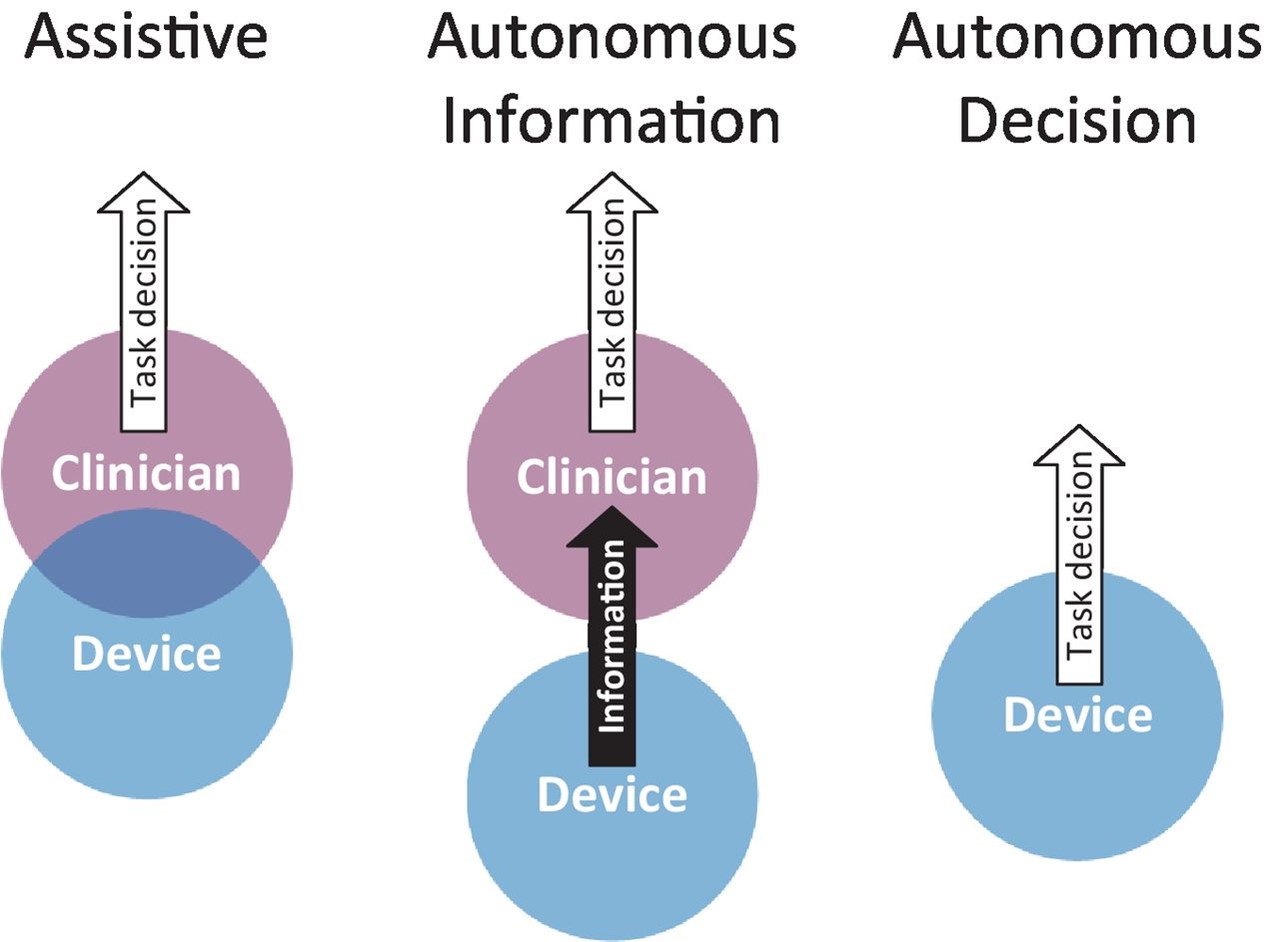

Figure 1. The relationship between machine learning based medical devices and the clinical tasks performed by clinicians.

Patient safety is at risk if AI does not properly support the tasks clinicians perform and the way they work. Clinicians also need training in how to work with AI safely and effectively, including an understanding of its strengths and limitations. For example, many AIs are trained only on data from adults and are therefore not suitable for use with children.

Clinicians also need to appreciate the extent to which they can rely on AI: whether they can allow it to function autonomously, producing output that can be acted on directly, or whether its output requires careful clinician supervision and approval. Ultimately, clinicians remain responsible for many tasks and their outcomes. Doctors are responsible for the diagnoses they make and the treatments they prescribe, regardless of any AI contribution. The situation is similar to that of "self-driving cars", which, despite their marketing, require constant driver supervision and a readiness to take control should problems arise. Consequently, traffic accidents involving the failure of self-driving AIs are nearly always blamed on the driver.

The goals of this project are:

- To examine how and to what extent medical devices using machine learning support clinician decision making.

- To develop a framework for classifying clinical AI by level of autonomy.

- To characterise the types of technical problems and human factors issues that contribute to AI incidents.

- To develop requirements for safe implementation and use of AI.

Based on analyses of AI-based medical devices approved by the US Food and Drug Administration (FDA), we propose a framework that classifies devices into three levels of autonomy:

- Assistive devices – characterised by an overlap between what the device and the clinician contribute. In breast cancer screening, for example, both the device and the clinician identify possible cancers; however, clinicians are responsible for deciding what should be followed up and therefore must decide whether they agree with the cancers marked by the AI.

- Autonomous information – characterised by a separation between what the device and the clinician contribute to the activity or decision. An example is an ECG device that monitors heart activity, interprets the results and provides information, such as a quantification of heart rhythm, which clinicians can rely on to inform decisions about diagnosis or treatment.

- Autonomous decision – where the device provides a decision on a clinical task, which can then be enacted by the device or by the clinician. An example is the IDx-DR screening system in the US, which detects diabetic retinopathy. General practitioners can act on positive findings and refer those patients to specialists for diagnosis and treatment without having to interpret the retinal photographs themselves.

Figure 2. The three levels of autonomy demonstrated by AI-based medical devices.

The framework is intended to inform appropriate training and help ensure patient safety as AI-enabled devices become more commonplace in medical decision-making for diagnosis and treatment.

This research was selected as an Editor's Choice article by the leading international journal BMJ Health & Care Informatics, and has been cited in the UK Chartered Institute of Ergonomics and Human Factors White Paper on AI in Healthcare from a Human Factors/Ergonomics perspective.

References

- Lyell D, Coiera E, Chen J, Shah P, Magrabi F. How machine learning is embedded to support clinician decision making: an analysis of FDA-approved medical devices. BMJ Health Care Inform. 2021;28.

Project sponsors

- The National Health and Medical Research Council Centre for Research Excellence in Digital Health (APP1134919)

Related projects

- Evaluating AI in real-world clinical settings

- Explainable AI in healthcare

- Automation in nursing decision support systems

- AI-enabled clinical decision support in resource constrained settings

Project status

Current


Content owner: Australian Institute of Health Innovation. Last updated: 11 Mar 2024 6:25pm