Level 3, 75 Talavera Rd

Macquarie University NSW 2109

Research excellence

World-class researchers and cutting-edge research facilities drive our mission to change lives on a global scale.

Our research strengths and capabilities span:

- allied health

- biomedical and clinical sciences

- health systems and health informatics

- linguistics and hearing science

- psychology and cognition

- public health.

Research in our departments and schools

Learn more about the research strengths our schools and departments are engaged in.

Research facilities and services

Our research is supported by high-quality research facilities, including our PC2 laboratories, participant-based research and medical imaging capabilities, access to centralised Macquarie University research facilities, and our service units in biosample collection (biobanking), advanced surgery and clinical trials.

An introduction to key facilities is given below.

We have the largest single PC2 wet laboratory at Macquarie University (more than 1600 square metres), which operates as a shared facility for research within the faculty. It supports diverse biomedical research in areas such as neurodegenerative diseases (MND, dementia), neurobiology, neuropharmacology, cancer and clinical therapies. The laboratory is equipped with a range of specialist equipment, enabling:

- cell culture and blood processing

- state-of-the-art microscopy, eg confocal, epifluorescence, light-sheet and high-content imaging

- histology

- analytical processing, eg high-end LC-MS and Simoa systems

- flow cytometry and cell sorting

- next-generation sequencing

- in-vivo and in-vitro surgery.

For more information about these facilities, contact lab.operations@mq.edu.au.

The Cancer Biobank focuses on collecting biological samples and clinical data across diverse cancer types, both from Macquarie University Health and from other cancer services. See the Cancer Biobank website for more information.

Our purpose-built simulation hub houses extensive simulation equipment for research purposes. It includes:

- real-world driving and flight simulators

- motion-capture-equipped exercise physiology facilities

- virtual reality (VR) facilities, including a room-scale, 18.8 m-wide, 240-degree 3D projection system and four separate dedicated multi-agent VR systems that can be adapted to driving, flight and other forms of motion as required.

The hub allows our researchers to investigate aspects of human performance safely and accurately across a range of environments and in conditions that closely resemble the real world.

For more information about these facilities, contact fmhhs.research.innovation@mq.edu.au.

Advanced medical imaging for clinical research and fundamental studies is provided through Macquarie Medical Imaging, located in the Macquarie University Hospital.

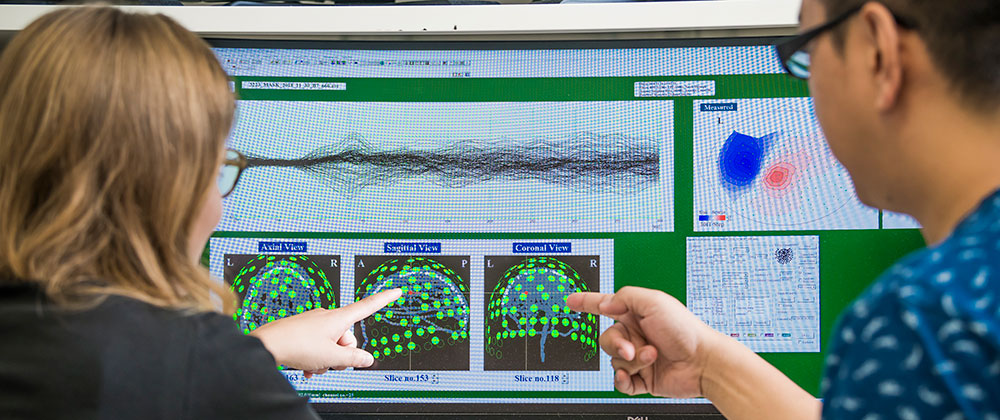

Our capabilities include two high-field 3-Tesla MRI scanners, a digital PET–CT scanner and a host of other imaging modalities, which are used for both clinical care and innovative clinical research. Together with the FMHHS's magnetoencephalography (MEG) facility, these capabilities form a node of the NCRIS-funded Australian National Imaging Facility (NIF).

For more information about these facilities, contact the MMI research team at mmi.research@mqhealth.org.au.

Our integrated advanced surgery facilities give industry and clinicians access to cutting-edge laboratories and expertise for developing, and training in, new surgical techniques. They provide capabilities in areas such as robotic surgery and the design and use of new types of orthopaedic implants, and they support a range of biomechanical research.

The rapidly growing Neurodegenerative Disease Biobank holds one of the largest collections of motor neuron disease (MND, also known as ALS) samples and data in Australia, as well as samples from other neurodegenerative diseases. It primarily collects samples from the Neurology Clinic’s MND Service.

See the Neurodegenerative Disease Biobank website for more information.

Our participant-based research facilities are operated under a shared-access model that ensures all our researchers have access to our wide range of infrastructure, including:

- Magnetoencephalography (MEG) – we have two whole-head MEG systems, including one specially designed for paediatric use. Both systems can process MEG data and provide feedback in real time during an experimental session.

- Anechoic chamber – an 80-square-metre chamber equipped with a 41-speaker array and a 200-degree visual projection system.

- Neurophysiology – numerous facilities are equipped with a range of electroencephalography (EEG), functional near-infrared spectroscopy (fNIRS) and functional transcranial Doppler ultrasound (fTCD) systems. We also operate transcranial magnetic stimulation (TMS) systems for non-invasive brain stimulation.

- MRI – researchers have access to two on-campus MRI scanners (see medical imaging above) equipped for visual, auditory, olfactory and tactile stimulation of participants. Simultaneous EEG can also be recorded.

- Electrophysiology – we operate a hub of identical facilities for researchers who need to integrate electrophysiological measures. Primarily based on ADInstruments and BIOPAC systems, these tools give researchers access to galvanic skin response (GSR), electrocardiography (ECG), electrogastrography (EGG) and many other measures.

- Eye-tracking – high-end SR Research EyeLink eye-tracking systems are provided in dedicated facilities and are also integrated into many other specialised facilities. We also operate a small fleet of EyeLink Duo systems and Tobii Glasses for portable eye-tracking in the field.

- Speech physiology – multiple electromagnetic articulography (EMA) and ultrasound systems are available.

- Translation and interpretation – our Bosch digital conference system supports live interpretation of speakers in the studio, in both solo and group scenarios.

- Recording studios – several of our research facilities are housed in sound-attenuated rooms. Some of these are also set up as studios focused on human speech production and speech perception.

- Behavioural research facilities – we have an extensive suite of rooms, all equipped with identical base computers optimised and configured to provide accurate and consistent data collection.

For more information about these facilities, contact fmhhs.research.innovation@mq.edu.au.

Our research centres

Find out more about the Macquarie University research centres that we host and our faculty research centres.