From probably private to provable privacy — on the need for rigorous privacy treatment for data-driven organisations.

Dali Kaafar (Macquarie University, CSIRO Data61), Hassan Asghar (Macquarie University, CSIRO Data61), Raghav Bhaskar (CSIRO Data61)

The increasing number of privacy breaches on so-called “de-identified” data only reinforces the belief that traditional approaches to data privacy are inadequate to provide privacy guarantees and are deemed to disappoint us over and over again. We argue that it is time to start investing in provably private data processing mechanisms in lieu of « probably private fixes » developed over the years and that have passed their use-by date.

Beyond the etymological debate about the “Big Data” origins, there is arguably a very wide agreement that data is pervasive and plentiful. Data is everywhere to the extent that we hardly envision any new technological advances in major industrial sectors without involving data. From precision agriculture, smart energy and transportation to smart healthcare, the world seems to have “finally” shifted to the smart paradigm that clearly is shaping the rise of a revolutionary data-driven economy. According to McKinsey Global institute, open data and shared data from private sources can help create $3 trillion a year of value in seven areas of the global economy.

With these potentials however come greater concerns and responsibilities not only for protecting individuals privacy but also for ensuring organisations’ key asset --data, is safe and kept confidential while still being used for what it is the most valuable for. That is extracting analytics and insights for effective decision making, optimizing key services or uncovering new business opportunities. The promise of Data marketplaces is becoming a reality. Just as shares and currencies are traded on different types of exchanges, different types of data for a variety of data analytics scenarios could potentially be monetized in different types of marketplaces.

Personal data however cannot be used without full transparency on how it is used and with whom it is shared, or with little guarantees on the safety of the data process, across the overall data lifecycle. Failing that can have far-reaching consequences for data protection rights of individuals and as recently witnessed the very democratic process.

In such a context, data privacy is often perceived as a hindrance to business development and data monetization. Opportunities that could be enabled by collaborating on data seem to be currently inhibited either due to concerns of not meeting the necessary privacy regulations, controls and compliance or doubts about the loss of some competitive advantage or most likely both. In practice however, enormous resources are spent by organisations, public and private alike, to make sure their data is protected and their data sharing processes (internal or with external collaborators) satisfy some privacy guarantees.

Too often however, organisations make the news headlines with privacy scandals, poorly anonymized logs and datasets pulled after re-identification concerns. Implications can be very serious. In 2015, a study revealed that 57% of European consumers are worried their data is not safe and 81% of them believe their data holds significant value. In Australia, according to the Deloitte Australian Privacy Index of 2016, nine consumers out of 10 would avoid doing business with companies that they think do not protect their privacy. Perhaps even more striking, and beyond the simple attitude, the numbers are also showing that consumers do take action, with 59% of Australians having decided not to deal with a company due to Privacy concerns. Put simply, the stakes are too high for companies to ignore the privacy consideration and as such, there’s no worse for a data protection officer or a chief Information officer of any organisation than making such headlines.

Traditional Approaches to Data Privacy: The Myths Pyramid

Unfortunately, there is a lot worse than making these news headlines, and that is, making these headlines while being convinced that everything has been done right. Companies understand and recognize that using data comes with privacy risks. Very often, boards executives, privacy officers and data scientists within these organisations would have heard about re-identification risks for instance. This is the most common threat that data-driven organizations are faced with and they certainly take actions to prevent their data sharing processes from being vulnerable to re-identification.

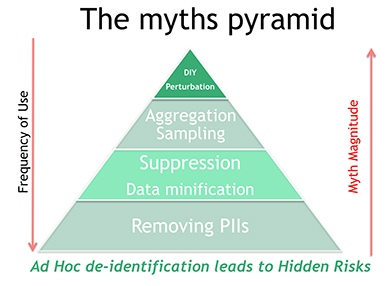

Yet, they learn usually the hard way, that traditional privacy preserving techniques they have been using are inefficient, not fit for purpose or altogether missing. These techniques ranging from removing personally identifiable information to simple aggregation techniques and suppression or sampling as well as some DIY perturbation mechanisms are generally applied as patchy solutions. Often times, they are even considered as measures to “check a box” and meet some compliance requirement.

Most of these techniques sound intuitive enough to convince the different stakeholders that the data is probably private. Unfortunately, there are some big limitations for the so-called “de-identification” techniques. In a very nice essay from 2014, Narayanan and Felten explained how “de-identification” fails to resist inference of sensitive information either in theory or in practice and why attempts to quantify its efficacy are unscientific.

An illustrative example of ad-hoc perturbation techniques is to add random noise to data and expect it to be “de-identified”. A useful analogy to show the inefficacy of some potentially intuitive DIY techniques is the telling and retelling of a story, with random details altered each time to protect the privacy of the principals. If all the versions are collected and collated, the obscured details would emerge. This is a potentially counterintuitive phenomenon colloquially known as the Fundamental Law of Information Recovery.

In a recent study, we have also shown the vulnerability of an example of such perturbation algorithms as used by the Australian Bureau of Statistics for TableBuilder, an online tool which enables users to create tables, graphs and maps of Australian census data.

We demonstrated how an attacker, who may not know the perturbation parameters, can not only find any hidden parameters of the algorithm but also remove the noise to obtain the original answer to some query of choice. None of the attacks we presented depend on any background information.

Breaches to privacy have caused widespread disruption in democratic processes and commercial enterprises, and the response by governments has been almost always one of policy and governance frameworks. There has been recently a number of governance frameworks and semi-technical descriptions of approaches to tackle the problem of re-identification risks while accessing shared or openly released data (e.g. de-identification decision making framework, Data Sharing Frameworks , the five safes framework) aiming to deliver a form of guidelines for best practices for data confidentiality and privacy. While there is some usefulness in adopting some of the recommendations from these initiatives, in particular from a data governance perspective by getting data custodians to resist the temptation of using and sharing the data with no preliminary and basic checks, the risk of these frameworks is again associated with the lack of scientific ground to assess their efficacy and the threat of promoting of a false sense of security.

Sadly, some of the arguably inefficient traditional approaches are very popular and most of the time they are being considered as the ultimate techniques to “de-identify” data creating some risky myths and a very profound false sense of privacy. Of particular concern is the belief that some of these techniques are safer because they adopt an approach that is intuitive enough to be considered secure. The Myth pyramid shown below just represents how often some of these probably private techniques are used. Simpler techniques are used more often but then more “complex” techniques do not guarantee any better privacy. Perhaps, the one guarantee they offer though is that they are recipes for disasters just waiting to happen.

Several of the traditional perturbation techniques and alike have been comprehensively documented under the principle of statistical disclosure control (also referred as Statistical Confidentiality). One key issue that has been clearly identified is that practitioners ultimately don’t know what notion of privacy is achieved. For instance, a common limitation is the lack of understanding of what is the compound privacy impact of releasing two tables from the same data through these techniques. Another major problem is their over-reliance on assumptions about the available background information (also called auxiliary information) to be used for re-identification.

Several of the traditional perturbation techniques and alike have been comprehensively documented under the principle of statistical disclosure control (also referred as Statistical Confidentiality). One key issue that has been clearly identified is that practitioners ultimately don’t know what notion of privacy is achieved. For instance, a common limitation is the lack of understanding of what is the compound privacy impact of releasing two tables from the same data through these techniques. Another major problem is their over-reliance on assumptions about the available background information (also called auxiliary information) to be used for re-identification.

How should we approach Data Privacy?

Harold Wilson, a British PM once famously said, “I'm an optimist, but an optimist who carries a raincoat”.

Retrieving high quality statistics from data without revealing information about individuals is a longstanding and open problem. The fundamental premise of the quest to solve this challenge has been to find the optimal tradeoff between privacy guarantees and statistical utility. This approach stems from being pragmatic about the need for data to be useful (and used) in one way or the other while still being optimistic about not giving away individual's privacy. The Pragmatic-Optimist attitude seems to be the way to move forward in a world where various revolutionary data-driven technologies are marching.

Designing privacy-preserving technologies enabling such a tradeoff is complex. First, it involves the engineering of algorithms, preferably as generic and data-independent as possible that capture the notion of utility of the data, but perhaps more importantly that measure the potential risks over the various uses of the data. Secondly, it requires the ability to directly control such algorithms and choose the appropriate settings leading to an optimal accuracy of the data querying versus privacy loss tradeoff.

The first problem lies in the domain of computer science. The second lies in the domain of economics, ethics and policy making.

“Computer science got us into this mess, can computer science get us out of it?” (Dr. Latanya Sweeney, 2012).

In order to get to choose the optimal settings though, economists and policymakers would need a currency they would reason about and work with: a measure of privacy loss, if any.

Without a proper quantification of the notion of privacy loss, any effort will likely be just yet another intuitive approach but not provably secure.

Privacy protection is not necessarily about intuitive approaches or just governance and compliance. Firstly, most if not all ad-hoc techniques would lead to unknown and hidden risks. The complexity introduced by the challenge of preserving individuals privacy while still being able to retrieve some useful statistics out of the data in use requires a mathematically rigorous theory of privacy and its loss. Secondly, without a way to properly measure the privacy guarantees or the lack thereof, frameworks would be just fancy documents sitting in cupboards with data scientists ungeared to implement the nice guidelines and best practices.

The need for a mathematically rigorous definition of Privacy (and the lack thereof)

In order to provide protection of some sort, one needs to clearly understand what is it that needs protection in the first place. In the area of privacy-preserving data analysis, in which some statistics from a possibly sensitive dataset are to be learned and released, we need to first identify what constitutes a privacy violation.

Before contemporary attempts at tackling this question, a vague understanding of privacy as “anonymity” was commonplace. Practitioners released datasets “sanitised” in a way so as to avoid linking data to real individuals; called de-identified datasets. Such techniques range from the simple (removing obvious identifiers such as names and addresses) to the more sophisticated (aggregation, sampling, simple perturbation, etc.). A plethora of subsequent studies have shown that this practice provides scant privacy guarantees, as individuals can be re-identified, in many cases, by linking the released datasets to other public sources of information.

A key reason for the failure of these de-identification techniques is a lack of formalism and limited understanding of the capabilities of the adversary. Consequently, it is unclear if a particular technique is sufficient for privacy, e.g., what level of generalisation is enough to protect against linking?

The next series of privacy definitions attempted to formalize the notion of anonymity and linkability resulting in the notion of k-anonymity and its variants (l-diversity, t-closeness). These notions tried to ensure that there were a reasonable number (controlled by the privacy parameter) of records that the attacker would have to deal with, even when she looked at any subset of field values, thereby making it impossible to be certain about any individual’s record and thus the inability of the attacker to link a record in the dataset with any public source of PII. These privacy notions could be achieved by analysing the dataset itself, without consideration of any other dataset that may also include information about the participants of the dataset. Although providing protection against re-identification, k-anonymity and its variants are susceptible to other privacy attacks such as inferring data of a target individual through for instance an intersection attack over multiple data releases.

The example above shows that holistic privacy guarantees cannot be achieved by constructing definitions that target specific privacy attacks, e.g., re-identification. Thus there was a need to think about data privacy in more general terms: what definition of privacy would withstand all known and unknown (future) attacks; as well as arbitrary background information?

One such early attempt can be traced back to Tore Dalenius who defined privacy as the data analyst learning nothing new about any individual from a released dataset, i.e., the data analyst’s knowledge of an individual after accessing the dataset remains the same as before accessing the dataset. This came to be known as the goal of “perfect privacy.” This is similar to definition formation in the area of cryptography where the notion of semantic security was proposed to formalise the notion of encryption. However, since the whole purpose of data analysis is to learn something new from the dataset, ultimately one learns something about the individuals in the datasets as well. For instance, if a dataset reveals that customers in their 70s have the proclivity to buy a particular product, then knowing just this piece of information reveals some information about your grandparents (even if the dataset never contained their data!). Releasing this dataset with perfect privacy would mean even this information needs to be withheld, resulting in an entirely useless data release. Thus, perfect privacy was too strong to yield any meaningful “utility.”

Luckily, this cryptographic way of defining privacy did not have to be completely overhauled. Instead of reasoning about the “before-vs-after”, a notion known as differential privacy was proposed. This new notion of privacy is achieved when the knowledge of the analyst about an individual remains “almost similar” after access to a dataset that includes that individual’s record as opposed to a dataset that does not. Implicitly, this definition does not consider anything that can be learned about an individual without her data as a privacy violation, since the individual has no control over other’s data and such information is the very purpose of data analysis, as exemplified above. Thus, as long as the knowledge gained about an individual from these two kinds of dataset is close (captured by a privacy parameter) to each other, the privacy notion is satisfied. Additionally, by introducing a notion of a ‘privacy budget’, it formally captures the learning that repeated disclosures about a dataset weaken the privacy of individuals and thus need to be part of any privacy analysis. And perhaps the single most important feature of this framework is the ability to achieve this notion of privacy without having to study the background information available to the analyst.

If it is private, you can prove it!

The above summarises the definitional approach to privacy. One first proposes a privacy definition which states what does it mean for a process to be privacy-preserving. Practitioners can then construct programs/algorithms that satisfy the privacy definition. That is, one proposes a technique (an algorithm or a program) and proves that it satisfies the definition of privacy. The dataset released through this technique then automatically inherits the properties of the privacy definition. A major advantage of this approach is that researchers can concentrate their efforts into dissecting the privacy definition itself to assess if it violates plausible privacy issues. If the definition is found weak, concerted efforts can be made to replace it with an improved definition; leading to further progress in our understanding of privacy as well as more robust privacy definitions.

From the early days of vague and incomplete privacy notions and ad hoc privacy solutions, to the current era of differential privacy, research in data privacy has shifted from heuristic approaches to the provable-privacy paradigm. Of course, past attempts and failures have contributed into the now fairly mature understanding and practice of data privacy. Despite this progress, there is still significant resistance to this new approach of tackling privacy. Multiple factors might be in play: less than ideal utility (which may be impossible for even a very weak notion of privacy), considerable investment in the old technology (providing for instance “de-identification as a service”), the need to only tick boxes when it comes to privacy (e.g., just satisfying current privacy laws, no matter how outdated), lack of understanding of more rigorous privacy definitions (the field of anonymization and statistical disclosure control is comparatively intuitive for data scientists and statisticians), etc. Most of these objections are not anti-progressive per se; if utility is not good enough, one can prioritize research into algorithms that improve on the state-of-the-art.

This is not to say that differential privacy, exemplified above as an instance of provable-privacy practice, is the panacea. On going research is trying to find relaxations and variations of this notion to seek a better tradeoff between privacy and utility. Furthermore, differential privacy is tied to one particular privacy problem, i.e., data analysis in which we do not wish the analyst to tie information back to individuals. There are other privacy problems which are not tackled by differential privacy. Privacy-preserving record linkage is one such problem. In this problem two entities each with a sensitive dataset would like to find common individuals without revealing anything about other individuals. Likewise, privacy-preserving outsourcing requires that the entity performing analysis on data (on your behalf) learn nothing (not even the output of analysis). Each set of these privacy problems require their own privacy definitions (in both cases, differential privacy is not a meaningful notion). The practice of provable-privacy takes the field of data privacy out of the realm of ambiguity and bewilderness, to the arena of transparency and rigorous scientific discourse.

The AI revolution could be risky if privacy is not built into the design

With Machine Learning As A Service (MLAAS) becoming a reality, the threat to our privacy is already at higher risk.As the world is heavily adopting Machine learning techniques for various applications with large corporations, such as Amazon and Google, already providing machine learning as a service on their AI platforms for various organisations to generate analytics, improve sales efficiency and potentially generate revenue by selling access to trained data-driven models. Machine learning as a service is spreading rapidly across businesses despite evidence of severe private information leakage. Machine learning models can be stolen and reverse engineered. Individuals can be re-identified with several researchers already raising major concerns and showing that sophisticated machine learning techniques can be used to defeat other AI techniques, identifying individuals’ private data used in the training of these systems. This is yet an additional alarm to recall us for the need to provide provably private algorithms that guarantee a control over the privacy risks we would be taking.

Recent uptake of differential privacy by a few major corporations (Apple, Google, Microsoft, Uber) and government organizations (US Census Bureau) indicates that the wheels of provable-privacy are turning away from the old days of ad hoc de-identification techniques. As argued, differential privacy is just one instance of the application of provable-privacy; there are other data access scenarios and requirements, where differential privacy is inapplicable and there is a need to propose rigorous privacy definitions. The future of privacy practice is thus the need to argue about privacy in a mathematically rigorous way. Only then we can be certain that we won’t repeat mistakes of the past.

It is critically important for data practitioners, scientists, policy makers, data privacy officers and more generally data custodians and stakeholders to recognize the fact that any data analysis service is at risk of leaking information about individuals. There is perhaps a general consensus about this. However, knowing how to measure and quantify this residual risk, and understanding the key parameters in developing tradeoffs between privacy guarantees and utility of the data in use is crucial. The cost of achieving privacy might be high, but the costs associated with privacy violations are certainly even higher.